Most companies that use FFmpeg and store their data in object storage (such as Amazon S3, Azure Blob Storage, Google Cloud Storage, or on-premises solutions like MinIO) stage their data to a filesystem, run FFmpeg on it there, and then upload the result back to object storage. They do this because FFmpeg expects POSIX file access, and existing POSIX compatibility layers such as Mountpoint for Amazon S3 and s3fs are either too slow or don’t work with FFmpeg at all.

This workaround is no longer necessary. By using cunoFS with FFmpeg you can cut out the staging/upload steps (and the local filesystem) and run your existing media workloads directly on files stored in object storage. You can use cunoFS to connect virtualized, containerized, or serverless compute to your object storage to burst into the cloud or replace your existing on-premises infrastructure.

Comparing S3/object storage performance and compatibility for FFmpeg workloads

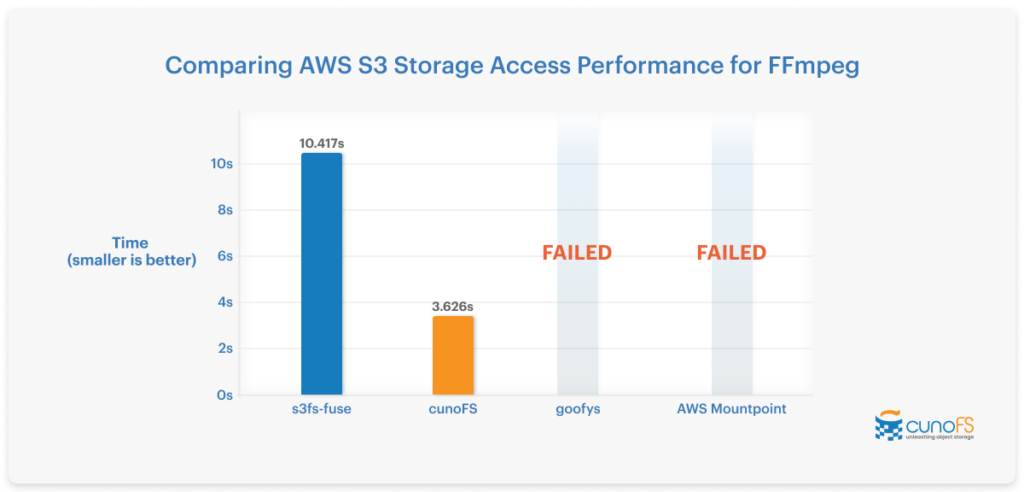

s3fs-fuse, goofys, and AWS Mountpoint all let you mount an S3 bucket as a filesystem; however, none of them provides adequate performance or compatibility for working directly on stored data. With these tools you typically have to either accept slower processing or stage data to local or network storage first. That workaround adds cost: fast cloud file storage such as EFS gets expensive, especially as you scale.

Unlike other object storage filesystem solutions, cunoFS does not require a FUSE mount or elevated user privileges, which makes it well suited to affordable serverless and containerized environments, automated workflows, and cost-effective spot instances. Its high level of POSIX compatibility also means you don’t need to stage data hosted in object storage before working on it, so it fits naturally into scalable environments. We also support object storage beyond S3, including Azure Blob Storage, Google Cloud Storage, and S3-compatible cloud and on-premises solutions.

FFmpeg cloud workload testing conditions

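To compare FFmpeg performance on data stored in AWS S3, we convert an Apple QuickTime .mov file (the open-source film Big Buck Bunny, which is available to download) into a standard .mp4 file. Because the command uses -c copy, FFmpeg copies the audio and video streams into the new container without re-encoding them, so the test mainly measures storage I/O rather than CPU. The conversion runs on a c5n.18xlarge instance in AWS.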

The test runs the conversion with the video files stored on EFS, and in S3 accessed through s3fs-fuse, goofys, AWS Mountpoint, and cunoFS, using the command:

```shell
time sh -c "ffmpeg -i INPUT_FILE.mov -c copy OUTPUT_FILE.mp4 ; sync"
```
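To repeat this measurement across several storage back ends, a small Bash wrapper can run the same stream-copy remux in each location and report the elapsed time in the same style as the results table. This is a minimal sketch, not the exact script behind the published numbers: the `bench_remux` function, the `FFMPEG_BIN` and `BENCH_LOG` variables, and the example paths are hypothetical, and it assumes each location already holds a copy of the input under the same name. An `s3://` location only works when cunoFS direct interception is active in the calling shell.

```shell
#!/usr/bin/env bash
# Hypothetical benchmark wrapper: time the same `-c copy` remux in several
# storage locations (mount points, or s3:// URIs when cunoFS interception is active).
set -euo pipefail

FFMPEG_BIN="${FFMPEG_BIN:-ffmpeg}"         # ffmpeg binary to run
BENCH_LOG="${BENCH_LOG:-bench_remux.log}"  # ffmpeg's own messages are appended here

# bench_remux NAME DIR [DIR...]
# Expects DIR/NAME.mov in every DIR and writes DIR/NAME.mp4 next to it.
bench_remux() {
  local name="$1"; shift
  local TIMEFORMAT='%3R'  # bash `time` prints only elapsed wall-clock seconds
  local dir elapsed status
  for dir in "$@"; do
    status=0
    # `time` reports on stderr, captured by the outer 2>&1; ffmpeg's stderr
    # goes to the log so it does not mix with the timing. sync flushes
    # cached writes, as in the original benchmark command.
    elapsed=$( { time {
        "$FFMPEG_BIN" -nostdin -y -i "$dir/$name.mov" -c copy "$dir/$name.mp4" \
          2>>"$BENCH_LOG" && sync
      } ; } 2>&1 ) || status=$?
    if (( status == 0 )); then
      printf '%s\t%ss\n' "$dir" "$elapsed"
    else
      printf '%s\tFAILED\n' "$dir"
    fi
  done
}

# Run only when arguments are given, for example:
#   ./bench_remux.sh video /mnt/efs/bench /mnt/s3fs/bench s3://my-bucket/bench
if (( $# > 0 )); then
  bench_remux "$@"
fi
```

Reporting FAILED instead of aborting keeps one unsupported back end (such as a sequential-write-only mount) from stopping the whole comparison.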

FFmpeg cloud workload benchmark results

The results below are clear: of the options tested, cunoFS is the best way to process data stored in object storage, running almost 3x faster than s3fs-fuse (3.626s versus 10.417s).

Running FFmpeg via AWS Mountpoint or goofys failed outright, because both only support sequential writes. Many common FFmpeg operations need more than this: when writing an MP4 file, for example, FFmpeg’s MP4 muxer seeks back to fill in header fields it can only know once the media data has been written. To use either tool, you would have to copy the files to local or EFS-attached storage first. cunoFS does not have this limitation and is built to handle all write and access patterns intelligently.
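The MP4 failure mode can be reproduced locally without any object storage. In the sketch below, a pipe stands in for a sequential-only write target, since FFmpeg cannot seek backwards in a pipe. We expect the MP4 attempt to fail, because the MP4 muxer needs seekable output unless fragmented output is requested, while MPEG-TS, a format designed for streaming, can be written strictly front to back. The `try_sequential_write` function and the output file names are hypothetical; this is an illustration, not part of the benchmark.

```shell
#!/usr/bin/env bash
# Illustration: stream-copy the same input through a pipe, which only allows
# front-to-back writes, once as MP4 and once as MPEG-TS.
set -uo pipefail

# try_sequential_write INPUT FORMAT OUTPUT
try_sequential_write() {
  local input="$1" format="$2" output="$3"
  # pipe:1 is ffmpeg's stdout, so ffmpeg cannot seek in its output;
  # with pipefail, the pipeline fails if ffmpeg fails.
  if ffmpeg -nostdin -loglevel error -i "$input" -c copy -f "$format" pipe:1 \
      | cat > "$output"; then
    echo "$format: OK"
  else
    echo "$format: FAILED"
  fi
}

# Run only when an input file is given, for example: ./sequential_demo.sh video.mov
if (( $# > 0 )); then
  try_sequential_write "$1" mp4 out.mp4     # expected to fail: needs seekable output
  try_sequential_write "$1" mpegts out.ts   # expected to succeed: streamable format
fi
```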

| Storage solution | Time to complete (lower is better) |
| --- | --- |
| cunoFS | 3.626s |
| s3fs-fuse | 10.417s |
| goofys | FAILED |
| AWS Mountpoint | FAILED |
| EFS | 4.158s |

In this test, running the command with cunoFS took 65% less time than with s3fs-fuse.

Even with EFS included alongside the S3-based solutions, cunoFS delivers the best performance of the storage options tested: it completed the FFmpeg task in about 13% less time than EFS, while remaining far more cost-effective.
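The percentages above follow directly from the benchmark table. This small awk-based sketch recomputes them from the measured times, reporting both the time saved, (1 - cunoFS time / other time) * 100, and the speedup, other time / cunoFS time. The `compare` function name is our own.

```shell
#!/usr/bin/env bash
# Recompute the comparisons from the benchmark table (times in seconds).

# compare LABEL CUNOFS_SECONDS OTHER_SECONDS
compare() {
  awk -v label="$1" -v cuno="$2" -v other="$3" 'BEGIN {
    saved = (1 - cuno / other) * 100   # percentage of time saved
    speedup = other / cuno             # how many times faster
    printf "%s: %.1f%% less time, %.2fx faster\n", label, saved, speedup
  }'
}

compare "cunoFS vs s3fs-fuse" 3.626 10.417   # cunoFS vs s3fs-fuse: 65.2% less time, 2.87x faster
compare "cunoFS vs EFS" 3.626 4.158          # cunoFS vs EFS: 12.8% less time, 1.15x faster
```

The two measures diverge as the gap grows: the roughly 13% EFS figure is time saved, which corresponds to about 1.15x the speed.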

Step-by-step: how to use cunoFS to run FFmpeg workloads from local, cloud, and serverless environments

Below, we walk through the setup steps we used to create our FFmpeg workflow and show how to connect to an S3 bucket using cunoFS. You can follow these steps to run benchmarks on your own data, or use them as the basis for processing your own media with FFmpeg on object storage. The first step is to set up your S3 bucket and connect it to cunoFS:

- Create an AWS S3 bucket (or use an existing one — cunoFS works with all of your data already in S3!) and an IAM user with read/write permissions for the bucket.

- Next, set up cunoFS according to the instructions for your platform. Linux, macOS (via Docker), and Windows (via WSL) are supported.
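For the first step, one way to create the bucket and a scoped IAM user from the command line is with the AWS CLI. This is a minimal sketch under stated assumptions: the bucket and user names are whatever you pass in, the `bucket_rw_policy` helper is hypothetical, and the inline policy grants only list, read, write, and delete on that one bucket, so check the cunoFS documentation in case it needs further permissions. Running it requires AWS CLI credentials that are allowed to administer IAM.

```shell
#!/usr/bin/env bash
# Sketch: create an S3 bucket plus an IAM user limited to read/write on it.
set -euo pipefail

# Print an IAM policy allowing listing the bucket and reading, writing,
# and deleting objects inside it. (Assumed minimal set; check cunoFS docs.)
bucket_rw_policy() {
  local bucket="$1"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:ListBucket"],
      "Resource": "arn:aws:s3:::${bucket}"
    },
    {
      "Effect": "Allow",
      "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
      "Resource": "arn:aws:s3:::${bucket}/*"
    }
  ]
}
EOF
}

# Usage: ./setup_bucket.sh BUCKET USER   (both names are your choice)
if (( $# == 2 )); then
  bucket="$1" user="$2"
  aws s3 mb "s3://${bucket}"
  aws iam create-user --user-name "$user"
  aws iam put-user-policy --user-name "$user" --policy-name "${bucket}-rw" \
    --policy-document "$(bucket_rw_policy "$bucket")"
  # Prints the new user's access key ID and secret; store them safely.
  aws iam create-access-key --user-name "$user"
fi
```

Scoping the user to a single bucket keeps the credentials you hand to cunoFS from reaching any other data in the account.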

Once you’ve installed cunoFS and connected it to your S3 bucket, you can enable cunoFS direct interception mode using the cuno command. This loads cunoFS into your shell and lets you access files in your S3 buckets from commands or applications such as ffmpeg, using S3 URI-style paths:

```shell
ffmpeg -i s3://my-bucket/path/to/file/video.mov -c copy s3://my-bucket/path/to/file/converted-video.mp4
```

With cunoFS enabled, you can run FFmpeg as you usually would, supplying S3 URIs instead of filesystem paths. Everything will “just work,” allowing you to work directly with files in S3 as if they were stored locally.
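Because cunoFS makes s3:// paths behave like ordinary files, existing batch scripts carry over unchanged. As a minimal sketch, the loop below remuxes a list of .mov objects to .mp4 files alongside the originals. It assumes it runs in a shell where cunoFS direct interception has already been enabled with cuno; the `remux_all` function, the `FFMPEG_BIN` variable, and the object paths are hypothetical, and inputs are passed explicitly rather than discovered by listing the bucket.

```shell
#!/usr/bin/env bash
# Sketch: remux each given .mov object to .mp4 next to it.
# Run from a shell where cunoFS interception is active (started with `cuno`),
# so ffmpeg can open s3:// paths directly.
set -euo pipefail

FFMPEG_BIN="${FFMPEG_BIN:-ffmpeg}"

remux_all() {
  local src dst
  for src in "$@"; do
    case "$src" in
      *.mov) dst="${src%.mov}.mp4" ;;
      *) echo "skipping (not .mov): $src" >&2; continue ;;
    esac
    # -c copy keeps the audio/video streams as-is and only changes the container.
    "$FFMPEG_BIN" -nostdin -y -i "$src" -c copy "$dst"
  done
}

# For example: ./remux_all.sh s3://my-bucket/path/to/a.mov s3://my-bucket/path/to/b.mov
if (( $# > 0 )); then
  remux_all "$@"
fi
```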

If you wish to use cunoFS in scripts or automated environments where you cannot launch direct interception mode, you can add it to your shell profile or enable automatic interception in containerized environments.

Further flexibility and cost efficiency with cunoFS + FFmpeg + AWS Lambda

Serverless workflows including AWS Lambda are attractive because they can be automated to create highly scalable and cost-effective environments: when new data is available to process, a workflow can be automatically triggered, and you only pay for the compute it takes for that workflow to complete.

AWS’s own example of FFmpeg in Lambda functions is limited to sequential writes to S3 and only supports the MPEG-TS format. This leads to poor compression and severely limits the range of devices that can play back the processed files. By using cunoFS instead, you unlock all FFmpeg functionality and formats for AWS Lambda (feel free to contact us and we’ll share our AWS Lambda example code!).

cunoFS allows you to take your existing scripts and workflows and start running them unmodified on S3-hosted data. It keeps you in control of your data and doesn’t scramble or otherwise modify your files, and our support for multiple object-storage back ends means you can always move your data to another cloud (or even back to your local infrastructure) at any time.

Download cunoFS for free evaluation and personal use, or contact us at sales@cuno.io if you have any enquiries or questions.