With cunoFS, you can mount S3 buckets and access objects inside them as files. This makes it possible to use almost any application with S3 storage, including custom software and machine learning tools like PyTorch, media tools like FFmpeg, and high-performance computing workloads.

cunoFS is faster than competing solutions for mounting S3-compatible object storage as a filesystem. It is easy to set up and can optionally preserve POSIX metadata such as file and folder permissions, timestamps, symlinks, and hardlinks, which would otherwise be lost in an S3 bucket. You can use cunoFS with multiple buckets (for example, to copy objects between buckets) and even with different object stores, such as Amazon S3, Azure Blob Storage, Google Cloud Storage, and on-premises object storage like MinIO and Dell ECS.

cunoFS works on Linux (including in containerized and serverless environments) and is available under WSL in Windows and via virtualization on macOS.

Steps to mount an S3 bucket as a local drive with cunoFS

Here’s how you can mount an S3 bucket as a local drive with cunoFS.

1. Download and install cunoFS: Go to the cunoFS Downloads page, then follow the installation instructions for your platform.

2. Add your AWS credentials to cunoFS: Create an IAM user with read and write permissions for your S3 bucket, then save its access key ID and secret access key to a text file (for example, credentials.txt) in the following format:

```text
aws_access_key_id = xxxxxxxxxxxxxxxxxx
aws_secret_access_key = xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx
```

Then, import these credentials into cunoFS:

```shell
cuno creds import credentials.txt
```

Any buckets that the IAM user has access to will be automatically added to cunoFS:

```text
– Examining credential file..
```
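Step 2 can also be scripted. The sketch below is an illustration, not official cunoFS tooling: it assumes the IAM user's keys are exported as the standard AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables and that the secret key line uses the `aws_secret_access_key` label, as in standard AWS credential files. It writes `credentials.txt` with owner-only permissions and runs `cuno creds import` only when the CLI is installed and real keys were supplied.

```shell
#!/usr/bin/env bash
# Sketch: write credentials.txt for cunoFS and import it.
# Assumptions: keys are exported as AWS_ACCESS_KEY_ID / AWS_SECRET_ACCESS_KEY,
# and the secret uses the aws_secret_access_key label (as in AWS credential files).
# If the variables are unset, the x placeholders are written instead.
set -euo pipefail

creds_file="credentials.txt"

# Create the file under umask 077 so it starts out readable only by its owner.
(
  umask 077
  cat > "$creds_file" <<EOF
aws_access_key_id = ${AWS_ACCESS_KEY_ID:-xxxxxxxxxxxxxxxxxx}
aws_secret_access_key = ${AWS_SECRET_ACCESS_KEY:-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx}
EOF
)
# umask does not affect a file that already existed, so tighten it explicitly too.
chmod 600 "$creds_file"

# Import only when the cuno CLI is installed and real keys were supplied.
if command -v cuno >/dev/null 2>&1 \
  && [ -n "${AWS_ACCESS_KEY_ID:-}" ] && [ -n "${AWS_SECRET_ACCESS_KEY:-}" ]; then
  cuno creds import "$creds_file"
fi
```

Keeping the file at mode 600 matters because it holds a long-lived secret key in plain text.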

For advanced ways of authenticating to AWS, as well as authentication for other cloud storage platforms, check out the Authentication documentation page.

3. Mount your bucket as a local directory: Create a directory and use the cuno mount command to mount all of your configured buckets in it:

```shell
mkdir ~/my-object-storage
cuno mount ~/my-object-storage
```

Now, any S3 bucket or other object storage you configure in cunoFS will be available under that filesystem path. You can confirm this by listing the contents of your S3 bucket:

```shell
ls ~/my-object-storage/s3/<bucket>/<path>
```
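The mount step can be made safe to re-run. This is a minimal sketch, assuming the cuno CLI is on your PATH, that `cuno mount` returns once the mount is ready, and that the util-linux `mountpoint` command is available: it creates the directory if needed, skips mounting when something is already mounted there, and lists the mount root.

```shell
#!/usr/bin/env bash
# Sketch: create the mount point and mount every configured bucket under it.
# Assumptions: cuno is on PATH, credentials were imported in step 2,
# `cuno mount` returns once the mount is ready, and `mountpoint` (util-linux) exists.
set -euo pipefail

mount_point="$HOME/my-object-storage"
mkdir -p "$mount_point"   # -p: no error if the directory already exists

if ! command -v cuno >/dev/null 2>&1; then
  echo "cuno not found on PATH; install cunoFS first" >&2
elif mountpoint -q "$mount_point"; then
  echo "$mount_point is already mounted"
else
  cuno mount "$mount_point"
  ls "$mount_point"        # S3 buckets should appear under an s3/ subdirectory
fi
```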

If you need to mount your bucket, or a folder in your bucket, at a single local path (without the s3 subdirectory), use the --root option of the cuno mount command:

```shell
cuno mount --root s3://<bucket>/<folder> /mnt/local_mount_path
```

You can also preserve POSIX metadata by mounting with the --posix option, so that file and folder permissions, timestamps, symlinks, and hardlinks are all retained when your data is stored on S3.
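To see which metadata actually survives, you can set permissions, a timestamp, a symlink, and a hard link on a test file and read them back. This sketch assumes a hypothetical TARGET_DIR variable pointing at a folder inside a mount created with --posix; when it is unset, the script uses a local temporary directory, which is handy as a native-filesystem baseline. It relies on GNU coreutils options (`touch -d`, `stat -c`).

```shell
#!/usr/bin/env bash
# Sketch: set POSIX metadata on a test file and read it back.
# Assumption: TARGET_DIR (hypothetical) is a folder in a cunoFS mount created
# with --posix; a local temp dir is used when it is unset. GNU coreutils only.
set -euo pipefail

target_dir="${TARGET_DIR:-$(mktemp -d)}"
f="$target_dir/posix-check.txt"

echo "hello" > "$f"
chmod 640 "$f"                                  # owner rw, group r, others none
touch -d '2020-01-01 00:00:00' "$f"             # explicit modification time

rm -f "$target_dir/posix-symlink" "$target_dir/posix-hardlink"
ln -s posix-check.txt "$target_dir/posix-symlink"   # relative symlink
ln "$f" "$target_dir/posix-hardlink"                # second hard link, same inode

# Print what the filesystem reports back: mode, mtime (epoch), link count.
stat -c 'mode=%a mtime=%Y links=%h' "$f"
readlink "$target_dir/posix-symlink"
```

Run it once with TARGET_DIR set to a folder in the bucket and compare the printed mode, timestamp, link count, and symlink target with the values the script set.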

4. Launch applications and access files: You can now work with the bucket using any application. For example, you could create a new file in your S3 bucket and edit it:

```shell
touch ~/my-object-storage/s3/<bucket>/<path>/myfile.txt
```
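Because the bucket appears as an ordinary directory, standard shell redirection works on it with no separate download or upload step in your script. A minimal sketch, assuming a hypothetical BUCKET_DIR variable that names a folder in a mounted bucket (it falls back to a local temporary directory when unset):

```shell
#!/usr/bin/env bash
# Sketch: create, write, append to and read a file with standard shell tools.
# Assumption: BUCKET_DIR (hypothetical) is a folder in a mounted bucket, e.g.
# ~/my-object-storage/s3/<bucket>/<path>; a local temp dir is used when unset.
set -euo pipefail

bucket_dir="${BUCKET_DIR:-$(mktemp -d)}"
file="$bucket_dir/myfile.txt"

touch "$file"                        # create the empty file, as in step 4
printf 'first line\n' > "$file"      # overwrite its contents
printf 'second line\n' >> "$file"    # append a line
wc -l < "$file"                      # count lines in the result
```

Storing the result in the bucket is left to cunoFS; the script itself only sees a path.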

Increase performance further with cunoFS Direct Interception mode

By default, cunoFS operates in Direct Interception mode, which you enable by running the cuno command to activate the cunoFS extensions in your shell. From there you can access your S3 bucket using URI-style paths, without using a FUSE mount at all:

```shell
$ cuno
(cuno) $ touch s3://<bucket>/<path>/myfile.txt
```

This is our fastest option, offering significant performance improvements even over our already fast FUSE mount. We also offer FlexMount, which lets you use Direct Interception and fall back to FUSE mounts when necessary. For machine learning specifically, cunoFS has an additional tool that makes data access even faster: check out the PyTorch accelerator package for more details.
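When a bucket is reachable both through Direct Interception and through a FUSE mount, a URI-style path and a mount path name the same object. The helper below is hypothetical (it is not part of cunoFS) and only rewrites the string; it assumes the mount layout from step 3, where S3 buckets sit under an s3/ subdirectory of the mount point, and that other schemes follow the same pattern.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of cunoFS): map s3://<bucket>/<path> to the
# matching path under a cunoFS FUSE mount point. Pure string manipulation.
# Assumption: the mount root has one subdirectory per scheme, like s3/.
set -euo pipefail

uri_to_mount_path() {
  local uri="$1"
  local mount_root="${2:-$HOME/my-object-storage}"
  local scheme="${uri%%://*}"   # text before the first "://"
  local rest="${uri#*://}"      # text after the first "://"

  # No "://" at all, or nothing after it: not a URI-style path.
  if [ "$scheme" = "$uri" ] || [ -z "$rest" ]; then
    echo "not a URI-style path: $uri" >&2
    return 1
  fi
  printf '%s/%s/%s\n' "$mount_root" "$scheme" "$rest"
}
```

For example, `uri_to_mount_path s3://my-bucket/data/myfile.txt /mnt/cuno` prints `/mnt/cuno/s3/my-bucket/data/myfile.txt`, which is useful when a tool that must run against the FUSE mount receives URI-style paths.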

Other tools can mount an S3 bucket, but they are slow

Working with a large number of files through the AWS CLI or an S3 SDK is slow and impractical: your scripts have to download each file and re-upload it every time something changes. For some applications, especially ones that read and write large files, switching to object storage is complicated and time-consuming. A common way to sidestep this problem is to mount your S3 bucket as a local drive, so that you can keep using your applications as they are.

There are many other tools that can mount S3 storage as a local drive, including s3fs, mount-s3, and goofys. These tools work, but they are slow, which makes them unsuitable for regular use with file-based applications.

cunoFS has a faster FUSE mount implementation than its alternatives, including s3fs, goofys, and enterprise solutions. It also provides native-filesystem access to S3 storage using Direct Interception mode, which is even faster.

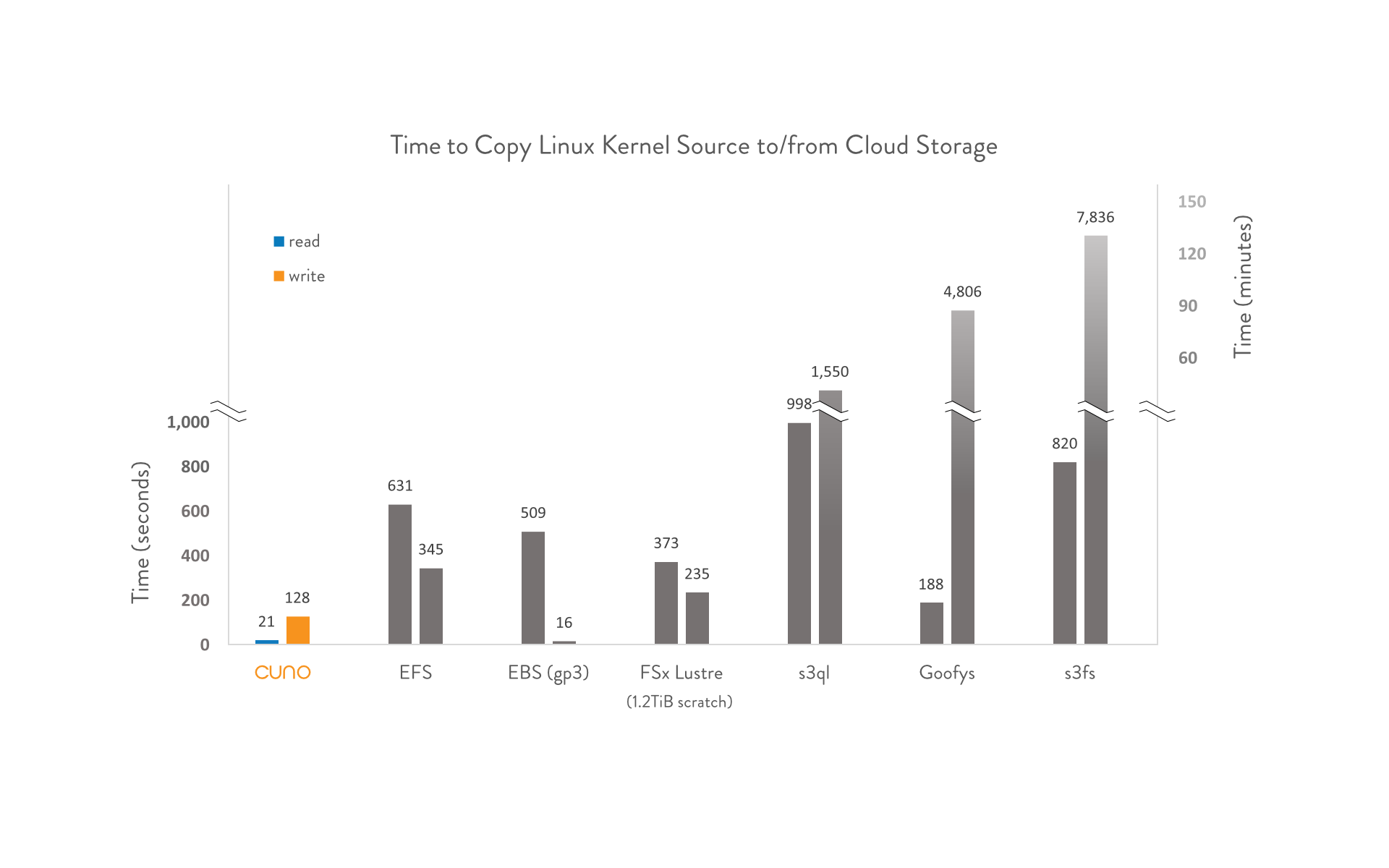

Here is a comparison of upload duration using cunoFS versus other solutions; in this case we’re reading and writing the Linux kernel source files (74999 files, 1.21 GiB) from and to S3:

These results show that, in Direct Interception mode, cunoFS is significantly faster than every competing solution tested (including EFS!):

- s3fs — cunoFS is 61x faster

- goofys — cunoFS is 38x faster

- s3ql — cunoFS is 12x faster

- FSx Lustre — cunoFS is 2x faster (18x faster on read operations)

- EFS — cunoFS is 2.7x faster (30x faster on read operations)
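Two quick calculations help when reading these figures. Both assume that "N x faster" is the ratio of elapsed times for the same workload; the chart remains the authoritative source. The first shows what a speedup factor means for wall-clock time, the second the average file size in the benchmark:

$$
S = \frac{T_{\text{competitor}}}{T_{\text{cunoFS}}}, \qquad S_{\text{s3fs}} = 61 \;\Rightarrow\; T_{\text{s3fs}} = 61\,T_{\text{cunoFS}}
$$

$$
\bar{s} = \frac{1.21 \times 2^{30}\ \text{B}}{74\,999\ \text{files}} \approx 17\,323\ \text{B/file} \approx 16.9\ \text{KiB/file}
$$

With an average of roughly 17 KB per file, the workload is dominated by per-file operations rather than raw bandwidth, which is where per-request overhead on object storage tends to matter most.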


FAQ

Is it possible to mount multiple S3 buckets?

Yes, all S3 buckets will be available under the path that you specify using the cuno mount command.

If you have multiple buckets, you can use cunoFS to copy objects between them with the standard cp command from Direct Interception mode:

```shell
(cuno) $ cp -r ~/my-object-storage/s3/bucket-1/* ~/my-object-storage/s3/bucket-2/
```
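For a full bucket, including subfolders and hidden files, a recursive copy followed by a comparison is a reasonable pattern. This sketch uses a hypothetical helper name, `copy_and_verify`, and assumes both paths sit under a cunoFS mount such as ~/my-object-storage/s3/:

```shell
#!/usr/bin/env bash
# Sketch: copy one bucket (or folder) into another and verify the result.
# Assumption: both paths are under a cunoFS mount, e.g. ~/my-object-storage/s3/;
# copy_and_verify is a hypothetical helper name.
set -euo pipefail

copy_and_verify() {
  local src="$1" dst="$2"
  mkdir -p "$dst"             # no-op when the destination already exists
  # "$src/." copies the folder's contents, including dotfiles and subfolders.
  cp -R "$src/." "$dst/"
  # diff -r exits non-zero if the trees differ in any way,
  # including extra files that were already present in $dst.
  diff -r "$src" "$dst" >/dev/null
}

# Example (placeholder bucket names):
# copy_and_verify ~/my-object-storage/s3/bucket-1 ~/my-object-storage/s3/bucket-2
```

The diff -r check reads every object in both locations, so on large buckets it roughly doubles the data read; drop it when that cost matters more than the extra assurance.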

Is it possible to mount other object storage types?

Yes, cunoFS supports Amazon S3, Azure Blob Storage, Google Cloud Storage, and any other S3-compatible object storage, both cloud-based and local, including MinIO, Dell ECS, and NetApp StorageGRID.

What kinds of files does cunoFS work with on S3?

cunoFS supports any file or directory structure and contents that can be stored on S3, including specialized workloads such as HPC applications, scientific computing, and machine learning applications. If it’s required for your workflow, cunoFS can retain POSIX metadata as well.