When choosing the EFS (Elastic File System) configuration options for your enterprise cloud storage, the choices can be overwhelming. Practical information can be hard to find, so we’ve assembled all of the relevant facts in one place, along with additional information that will help you pick the right mix of price and performance for your use case.

This article explains the EFS configuration parameters that affect price and performance (storage classes, performance modes, and throughput modes), and discusses when S3 object storage, FSx for Lustre, and EBS (Elastic Block Store) are suitable alternatives to EFS.

Different AWS storage types

EFS (Elastic File System): This filesystem-based storage is elastic, so it can scale in size according to your needs. It scales up when you add files and down when you remove files, without disturbing your workloads or requiring you to intervene to provision more storage.

S3 (Simple Storage Service): S3 provides object storage, meaning that all files are structured in a flat plane, rather than the hierarchical structure of a standard filesystem. You can pair S3 with open-source tools such as s3fs and goofys, which run on top of S3 so that you can interact with it like a native filesystem instead of through an object storage API, but they suffer from performance limitations.

EBS (Elastic Block Store): This is a block storage service that stores data in blocks of equal size. EBS volumes must be attached to EC2 (Elastic Compute Cloud) instances, so they are less scalable than other options. Typically this is not shared storage that multiple instances can attach to simultaneously.

FSx for Lustre: This is built on top of the Lustre filesystem, which is a parallel filesystem used for high-performance workloads.

cunoFS acts as a high-performance layer on top of your S3 bucket. Like s3fs, Mountpoint, and goofys, it gives you native filesystem access to S3, but it also provides much greater performance and full POSIX compatibility. You can also use the cunoFS Fusion option to combine S3 with either EFS or FSx for Lustre, giving both ultra-high throughput (served from S3) and high IOPS (served from EFS or FSx for Lustre) as needed, at a fraction of the cost of using EFS or FSx for Lustre alone.

Price vs. performance for EFS options and alternatives — summary

EFS options are the configuration settings you specify that affect the price and performance attributes of your EFS storage. They include storage classes, throughput modes, and performance modes.

EFS alternatives are other storage services within the AWS ecosystem that may (or may not, depending on your use case) provide better cost or performance if used instead of EFS.

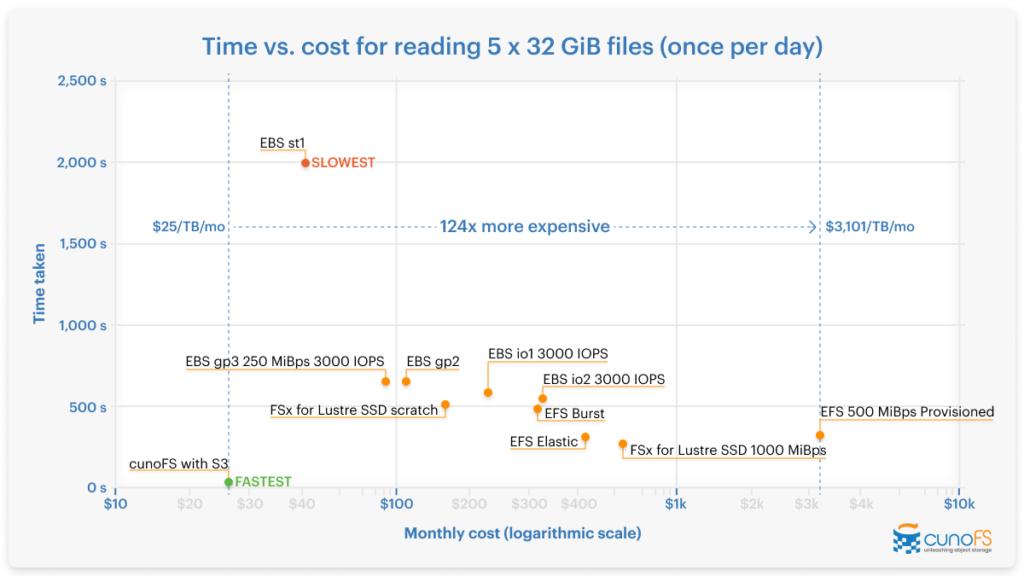

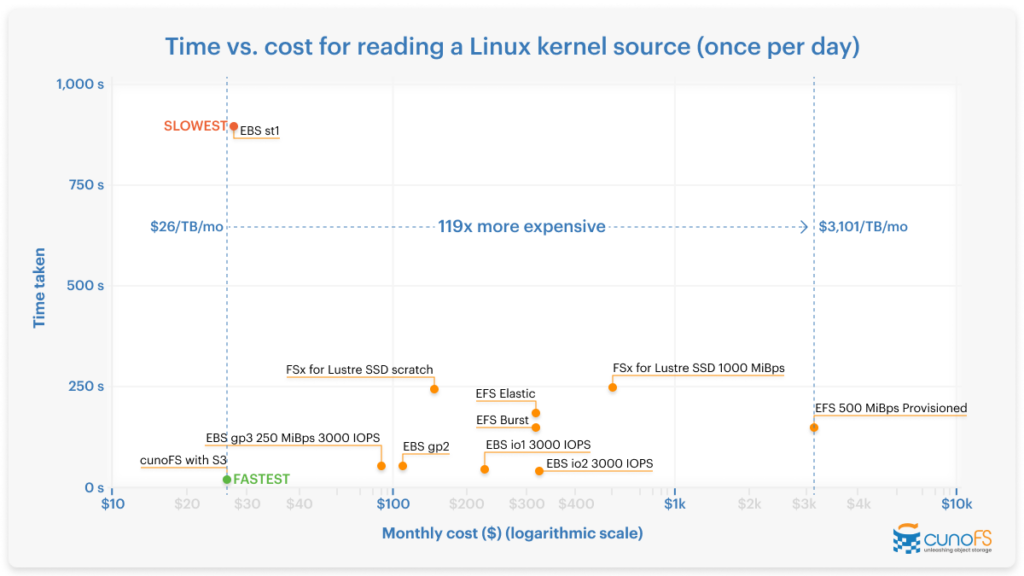

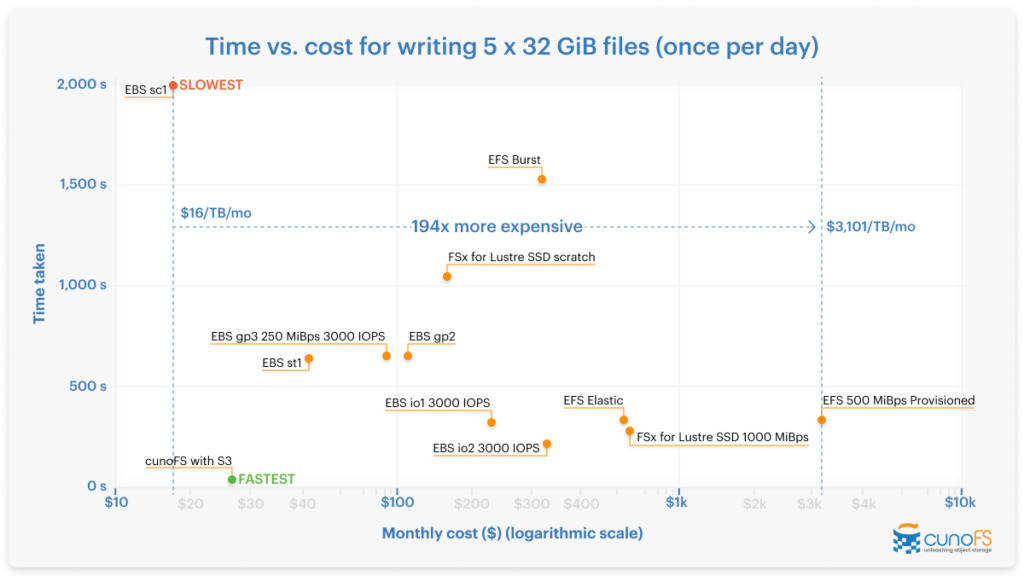

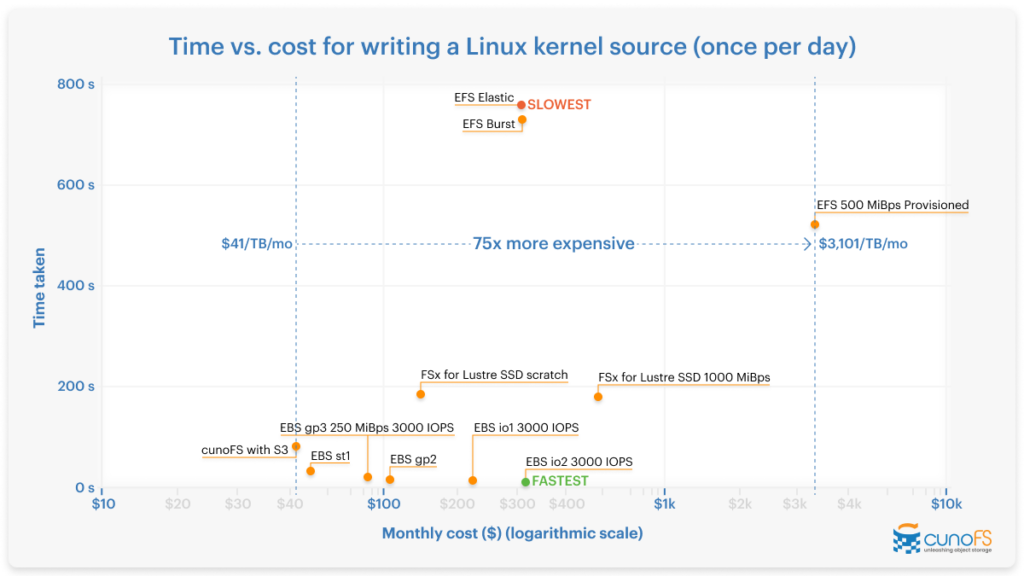

The graphs below summarize which options and alternatives are fastest and which are cheapest, and can help you see at a glance which options are best for your use case. If you want to replicate our results, you’re welcome to use our benchmarking suite.

All our metrics (for EBS, EFS, FSx Lustre and S3) are based on the following assumptions:

- 1 TB of data is stored for a month (30 days)

- EBS volumes must be attached to EC2 instances in order to be used — we used c5n.18xlarge

- AWS Region used is us-east-2 (Ohio)

- Once per day, the following operations are run:

  - Copy 5 x 32 GiB files into RAM

  - Copy a Linux kernel source (74999 files) into RAM (1.21 GiB)

  - Copy 5 x 32 GiB files from RAM into the storage system

  - Copy a Linux kernel source (74999 files) from RAM into the storage system (1.21 GiB)

- As these operations are run once per day, we have not included cold storage or Infrequent Access options in our metrics (as these options don’t make sense for data that needs to be accessed regularly).

- Monthly cost includes the cost of running each set of copy operations once per day for a month, plus the monthly storage fee for 1 TB of data. It does not include compute costs.

- The performance is the time taken per day to run one set of copy operations.

- For cunoFS, performance was measured in Direct mode to S3 only, without cunoFS Fusion. Costs are the AWS costs only, and assume a free (personal/academic) cunoFS license.

- All results were benchmarked in December 2023 using version 1.0.2 of the benchmarking suite.

Later in this article, we provide our full table of metrics for one copy operation per day, as well as a table of metrics for ten copy operations per day, to show how timings can change for larger volumes of data. This will help you decide between the different EFS options and their alternatives (within the AWS ecosystem).

We also explain the behavior and use cases for each option in detail.


Graphs of price vs. performance

Tables of price vs. performance

Metrics based on one copy operation per day for a month

| Storage option | Metric | Read: copy 5 x 32 GiB into RAM disk | Read: copy Linux kernel source into RAM disk | Write: copy 5 x 32 GiB into storage system | Write: copy Linux kernel source into storage system |
| --- | --- | --- | --- | --- | --- |
| EFS (default). Storage: Standard; Throughput: Elastic; Performance: General Purpose | Duration (seconds) | 327s | 186s | 330s | 756s |
| | Monthly cost ($) | $477 | $323–$324 | $631 | $324–$326 |
| EFS. Storage: Standard; Throughput: Bursting; Performance: General Purpose | Duration (seconds) | 494s | 150s | 1529s | 727s |
| | Monthly cost ($) | $322 | $322 | $322 | $322 |
| EFS. Storage: Standard; Throughput: Provisioned*; Performance: General Purpose. *Provisioned at 500 MiBps, which is the maximum throughput for a single EFS-NFS mount | Duration (seconds) | 327s | 149s | 338s | 519s |
| | Monthly cost ($) | $3101 | $3101 | $3101 | $3101 |
| EFS. Storage: OneZone; Throughput: Bursting; Performance: General Purpose. Durations are estimated. | Duration (seconds) | 494s | 150s | 1529s | 727s |
| | Monthly cost ($) | $172 | $172 | $172 | $172 |
| EFS. Storage: OneZone; Throughput: Elastic; Performance: General Purpose. Durations are estimated. | Duration (seconds) | 327s | 186s | 330s | 756s |
| | Monthly cost ($) | $326 | $173–$174 | $481 | $174–$176 |
| EFS. Storage: OneZone; Throughput: Provisioned*; Performance: General Purpose. Durations are estimated. *Provisioned at 500 MiBps, which is the maximum throughput for a single EFS-NFS mount | Duration (seconds) | 327s | 149s | 338s | 519s |
| | Monthly cost ($) | $2951 | $2951 | $2951 | $2951 |
| EBS General Purpose SSD volume (gp3). Provisioned with 0.25 GiBps (vs default 0.125 GiBps) and 3000 IOPS (default) in order to match gp2. | Duration (seconds) | 653s | 54s | 655s | 10s |
| | Monthly cost ($) | $91 | $91 | $91 | $91 |
| EBS General Purpose SSD volume (gp2) | Duration (seconds) | 653s | 54s | 655s | 7s |
| | Monthly cost ($) | $107 | $107 | $107 | $107 |
| EBS Provisioned IOPS SSD volume (io2). Provisioned with 3000 IOPS to match gp2. Not measured with io2 Block Express (EC2 c5n unsupported). | Duration (seconds) | 544s | 41s | 219s | 5s |
| | Monthly cost ($) | $329 | $329 | $329 | $329 |
| EBS Provisioned IOPS SSD volume (io1). Provisioned with 3000 IOPS to match gp2. | Duration (seconds) | 584s | 46s | 328s | 6s |
| | Monthly cost ($) | $211 | $211 | $211 | $211 |
| EBS Throughput Optimized HDD volume (st1) | Duration (seconds) | 1997s | 898s | 643s | 26s |
| | Monthly cost ($) | $48 | $48 | $48 | $48 |
| EBS Cold HDD volume (sc1) | Duration (seconds) | 1995s | 657s | 1997s | 81s |
| | Monthly cost ($) | $16 | $16 | $16 | $16 |
| FSx for Lustre (all options) | Duration (seconds) | 278-525s | 224-292s | 287-1046s | 145-186s |
| | Monthly cost ($) | $150-644 | $150-644 | $150-644 | $150-644 |
| FSx for Lustre SSD Scratch | Duration (seconds) | 525s | 245s | 1046s | 182s |
| | Monthly cost ($) | $150 | $150 | $150 | $150 |
| FSx for Lustre SSD 125 MBps | Duration (seconds) | 327s | 224s | 330s | 149s |
| | Monthly cost ($) | $178 | $178 | $178 | $178 |
| FSx for Lustre SSD 250 MBps | Duration (seconds) | 320s | 228s | 324s | 153s |
| | Monthly cost ($) | $225 | $225 | $225 | $225 |
| FSx for Lustre SSD 500 MBps | Duration (seconds) | 306s | 234s | 312s | 162s |
| | Monthly cost ($) | $365 | $365 | $365 | $365 |
| FSx for Lustre SSD 1000 MBps | Duration (seconds) | 278s | 248s | 287s | 178s |
| | Monthly cost ($) | $644 | $644 | $644 | $644 |
| FSx for Lustre HDD 12 MBps | Duration (seconds) | 375s | 245s | 439s | 186s |
| | Monthly cost ($) | $154 | $154 | $154 | $154 |
| FSx for Lustre HDD 12 MBps with SSD cache | Duration (seconds) | 309s | 251s | 370s | 150s |
| | Monthly cost ($) | $252 | $252 | $252 | $252 |
| FSx for Lustre HDD 40 MBps | Duration (seconds) | 382s | 234s | 420s | 145s |
| | Monthly cost ($) | $153 | $153 | $153 | $153 |
| FSx for Lustre HDD 40 MBps with SSD cache | Duration (seconds) | 293s | 216s | 377s | 130s |
| | Monthly cost ($) | $182 | $182 | $182 | $182 |
| cunoFS with S3*. *Does not include license fee for commercial use | Duration (seconds) | 29s | 18s | 37s | 79s |
| | Monthly cost ($) | $25 | $26 | $26 | $41 |
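The durations in this table can be converted into effective throughput figures, which makes the options easier to compare. The following is a minimal sketch, assuming the 5 x 32 GiB and 74999-file workloads described in the assumptions above; the function and variable names are illustrative and not part of the benchmarking suite.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2

LARGE_COPY_BYTES = 5 * 32 * GIB  # the 5 x 32 GiB large-file workload
KERNEL_FILES = 74999             # files in the Linux kernel source workload


def throughput_mib_per_s(total_bytes: int, seconds: float) -> float:
    """Average throughput in MiB/s for a copy that took `seconds`."""
    return total_bytes / MIB / seconds


def files_per_s(file_count: int, seconds: float) -> float:
    """Average number of files copied per second."""
    return file_count / seconds


# Durations taken from the one-copy-per-day table.
efs_elastic_read = throughput_mib_per_s(LARGE_COPY_BYTES, 327)
cunofs_read = throughput_mib_per_s(LARGE_COPY_BYTES, 29)
efs_elastic_small_writes = files_per_s(KERNEL_FILES, 756)
ebs_io2_small_writes = files_per_s(KERNEL_FILES, 5)

print(f"EFS Elastic large-file read: {efs_elastic_read:.0f} MiB/s")
print(f"cunoFS large-file read:      {cunofs_read:.0f} MiB/s")
print(f"EFS Elastic small writes:    {efs_elastic_small_writes:.0f} files/s")
print(f"EBS io2 small writes:        {ebs_io2_small_writes:.0f} files/s")
```

The EFS Elastic read figure works out to roughly 501 MiB/s, which is consistent with the 500 MiBps single-mount limit noted in the table.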

Metrics based on ten copy operations per day for a month

For this table, monthly cost includes the cost of running each set of copy operations ten times per day for a month, plus the monthly storage fee for 1 TB of data. It does not include compute costs. The performance is the time taken per day to run ten sets of copy operations.

Please note that the data for ten copies per day is based on estimated values, extrapolated from the costs of the single copy per day data, taking into account the cost per GB of each system, burst credits where relevant, and assuming that the storage system is idle for 24 hours between each run.
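As a rough illustration of this kind of extrapolation, the sketch below splits a one-copy-per-day monthly cost into a flat storage fee plus a per-run charge, then scales the per-run charge to ten runs per day. It assumes that the flat $322 EFS Standard Bursting figure represents the storage-only fee for 1 TB; that assumption, the function names, and the simple linear model are ours and may not match how the published estimates were produced.

```python
DAYS_PER_MONTH = 30


def per_run_charge(monthly_cost_one_run: float, storage_fee: float) -> float:
    """Charge for a single daily run, inferred from a one-run-per-day monthly cost."""
    return (monthly_cost_one_run - storage_fee) / DAYS_PER_MONTH


def monthly_cost(storage_fee: float, charge_per_run: float, runs_per_day: int) -> float:
    """Flat storage fee plus pay-as-you-go charges for every run in the month."""
    return storage_fee + charge_per_run * runs_per_day * DAYS_PER_MONTH


STORAGE_FEE = 322.0  # assumption: EFS Standard Bursting cost taken as storage only

# EFS Standard Elastic, one copy per day: $477 (large read) and $631 (large write).
read_charge = per_run_charge(477.0, STORAGE_FEE)
write_charge = per_run_charge(631.0, STORAGE_FEE)

read_x10 = monthly_cost(STORAGE_FEE, read_charge, runs_per_day=10)
write_x10 = monthly_cost(STORAGE_FEE, write_charge, runs_per_day=10)

print(f"Estimated large-file read cost at ten runs/day:  ${read_x10:.0f}")
print(f"Estimated large-file write cost at ten runs/day: ${write_x10:.0f}")
```

Under these assumptions the estimates come out at about $1872 and $3412, within a few dollars of the $1868 and $3414 listed for EFS Elastic at ten copies per day. The small gap is expected because the inputs are rounded to whole dollars.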

| Storage option | Metric | Read: copy 5 x 32 GiB into RAM disk (ten times) | Read: copy Linux kernel source into RAM disk (ten times) | Write: copy 5 x 32 GiB into storage system (ten times) | Write: copy Linux kernel source into storage system (ten times) |
| --- | --- | --- | --- | --- | --- |
| EFS (default). Storage: Standard; Throughput: Elastic; Performance: General Purpose | Duration (seconds) | 3271s | 1863s | 3304s | 7562s |
| | Monthly cost ($) | $1868 | $334–$343 | $3414 | $346–$363 |
| EFS. Storage: Standard; Throughput: Bursting; Performance: General Purpose | Duration (seconds) | 4939s | 1496s | 15292s | 7267s |
| | Monthly cost ($) | $322 | $322 | $322 | $322 |
| EFS. Storage: Standard; Throughput: Provisioned*; Performance: General Purpose. *Provisioned at 500 MiBps, which is the maximum throughput for a single EFS-NFS mount | Duration (seconds) | 3274s | 1487s | 3375s | 5186s |
| | Monthly cost ($) | $3101 | $3101 | $3101 | $3101 |
| EFS. Storage: OneZone; Throughput: Bursting; Performance: General Purpose. Durations are estimated. | Duration (seconds) | 4939s | 1496s | 15292s | 7267s |
| | Monthly cost ($) | $172 | $172 | $172 | $172 |
| EFS. Storage: OneZone; Throughput: Elastic; Performance: General Purpose. Durations are estimated. | Duration (seconds) | 3271s | 1863s | 3304s | 7562s |
| | Monthly cost ($) | $1718 | $183–$192 | $3264 | $195–$293 |
| EFS. Storage: OneZone; Throughput: Provisioned*; Performance: General Purpose. Durations are estimated. *Provisioned at 500 MiBps, which is the maximum throughput for a single EFS-NFS mount | Duration (seconds) | 3274s | 1487s | 3375s | 5186s |
| | Monthly cost ($) | $2951 | $2951 | $2951 | $2951 |
| EBS General Purpose SSD volume (gp3). Provisioned with 0.25 GiBps (vs default 0.125 GiBps) and 3000 IOPS (default) in order to match gp2. | Duration (seconds) | 6535s | 541s | 6550s | 96s |
| | Monthly cost ($) | $91 | $91 | $91 | $91 |
| EBS General Purpose SSD volume (gp2) | Duration (seconds) | 6534s | 541s | 6552s | 65s |
| | Monthly cost ($) | $107 | $107 | $107 | $107 |
| EBS Provisioned IOPS SSD volume (io2). Provisioned with 3000 IOPS to match gp2. Not measured with io2 Block Express (EC2 c5n unsupported). | Duration (seconds) | 5445s | 409s | 2194s | 55s |
| | Monthly cost ($) | $329 | $329 | $329 | $329 |
| EBS Provisioned IOPS SSD volume (io1). Provisioned with 3000 IOPS to match gp2. | Duration (seconds) | 5845s | 462s | 3276s | 55s |
| | Monthly cost ($) | $211 | $211 | $211 | $211 |
| EBS Throughput Optimized HDD volume (st1) | Duration (seconds) | 59276s | 8979s | 19080s | 262s |
| | Monthly cost ($) | $48 | $48 | $48 | $48 |
| EBS Cold HDD volume (sc1) | Duration (seconds) | 62351s | 6574s | 62394s | 808s |
| | Monthly cost ($) | $16 | $16 | $16 | $16 |
| FSx for Lustre (all options) | Duration (seconds) | 2776-5252s | 2242-2925s | 2873-10461s | 1445-3769s |
| | Monthly cost ($) | $150-644 | $150-644 | $150-644 | $150-644 |
| FSx for Lustre SSD Scratch | Duration (seconds) | 5252s | 2448s | 10461s | 1822s |
| | Monthly cost ($) | $150 | $150 | $150 | $150 |
| FSx for Lustre SSD 125 MBps | Duration (seconds) | 3266s | 2242s | 3298s | 1489s |
| | Monthly cost ($) | $178 | $178 | $178 | $178 |
| FSx for Lustre SSD 250 MBps | Duration (seconds) | 3196s | 2275s | 3237s | 1531s |
| | Monthly cost ($) | $225 | $225 | $225 | $225 |
| FSx for Lustre SSD 500 MBps | Duration (seconds) | 3056s | 2342s | 3116s | 1616s |
| | Monthly cost ($) | $365 | $365 | $365 | $365 |
| FSx for Lustre SSD 1000 MBps | Duration (seconds) | 2776s | 2477s | 2873s | 1784s |
| | Monthly cost ($) | $644 | $644 | $644 | $644 |
| FSx for Lustre HDD 12 MBps | Duration (seconds) | 3750s | 2450s | 4388s | 1863s |
| | Monthly cost ($) | $154 | $154 | $154 | $154 |
| FSx for Lustre HDD 12 MBps with SSD cache | Duration (seconds) | 3088s | 2513s | 3696s | 1503s |
| | Monthly cost ($) | $252 | $252 | $252 | $252 |
| FSx for Lustre HDD 40 MBps | Duration (seconds) | 3820s | 2342s | 4198s | 1445s |
| | Monthly cost ($) | $153 | $153 | $153 | $153 |
| FSx for Lustre HDD 40 MBps with SSD cache | Duration (seconds) | 2925s | 2163s | 3769s | 1298s |
| | Monthly cost ($) | $182 | $182 | $182 | $182 |
| cunoFS with S3*. *Does not include license fee for commercial use | Duration (seconds) | 288s | 175s | 373s | 792s |
| | Monthly cost ($) | $27 | $34 | $35 | $191 |

EFS storage classes explained

An EFS storage class refers to the specification of the hardware your data will be stored on. EFS offers several different storage classes that are targeted at different price, performance, and availability requirements, so it’s important to understand them before deciding on which to use.

Choosing a storage class

- EFS Standard (default): This is the highest-performance EFS storage class and is available in multiple Availability Zones.

- EFS Standard Infrequent-Access: Like EFS Standard, this is also available in multiple Availability Zones. Storage costs are cheaper than EFS Standard, but you have to pay to access your data, so this option only makes sense if the data you store needs to be accessed less than once every two months.

- EFS OneZone: This has the same high performance as EFS Standard, but is only available in one Availability Zone. It’s 47% cheaper than EFS Standard, so it’s worth choosing if you can deal with having your data in just one Availability Zone.

- EFS OneZone Infrequent-Access: Similar to EFS Standard Infrequent-Access, this is the Infrequent-Access version of EFS OneZone — with cheaper storage costs but extra costs for accessing data. Like EFS Standard Infrequent-Access, it only makes sense if you need to access your data less than once every two months.

- EFS Intelligent-Tiering: This solution will automatically move your files between the EFS Standard and EFS Infrequent-Access options, based on its detection of how often you are accessing your files. AWS’ “Intelligent” tiering is actually dumb in many ways — it’s a blunt instrument that won’t necessarily save you more money than just evaluating for yourself when to copy data between the different options.
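The guidance above can be condensed into a small decision helper. This is only a sketch of the rules of thumb in this section (Infrequent-Access for data touched less than once every two months, OneZone when a single Availability Zone is acceptable); the function name and the six-accesses-per-year threshold are our own way of encoding them, and Intelligent-Tiering is deliberately left out.

```python
def choose_efs_storage_class(accesses_per_year: float, single_az_ok: bool) -> str:
    """Pick an EFS storage class using the rules of thumb from this section."""
    # "Less than once every two months" is encoded as fewer than 6 accesses a year.
    infrequent = accesses_per_year < 6
    base = "EFS OneZone" if single_az_ok else "EFS Standard"
    return f"{base} Infrequent-Access" if infrequent else base


# Cross-check of the quoted OneZone saving, using the flat monthly costs from
# the Bursting rows of the benchmark tables ($322 Standard vs. $172 OneZone).
onezone_saving = 1 - 172 / 322

print(choose_efs_storage_class(accesses_per_year=365, single_az_ok=False))
print(choose_efs_storage_class(accesses_per_year=2, single_az_ok=True))
print(f"OneZone saving vs. Standard: {onezone_saving:.0%}")
```

The computed saving of roughly 47% matches the figure quoted for EFS OneZone.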

EFS throughput modes explained

The EFS throughput modes determine how much throughput your storage system will have and how varied it can be. “Throughput” in this case means how fast files can be read from or written to EFS storage.

Choosing a throughput mode

- Elastic (default): This uses a pay-as-you-go model (where you pay for reads, writes, and other I/O operations as they happen). Elastic mode is scalable, so it’s ideal for spiky workloads or when you don’t know in advance what level of performance is required. Elastic mode is the highest throughput mode and offers the fastest IOPS for larger file sizes at scale.

- Bursting: Bursting throughput mode means that I/O will be included in your fixed monthly storage pricing, making pricing more predictable than Elastic mode. Bursting mode works by accumulating Burst Credits while I/O is low and consuming them when I/O is high. The rate of accumulation of Burst Credits scales with the amount of storage in your filesystem, making it appropriate for spiky workloads. However, it is generally not as performant as Elastic mode.

- Provisioned: This mode requires you to fix your throughput limit to a specified amount. You should only use this mode if you know in advance the performance you need, or if the performance needed is fairly consistent throughout your workload.
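To make the Burst Credit mechanism more concrete, here is a toy simulation of a credit bucket. It is a simplified sketch of the behavior described above, not AWS's actual accounting: the class name, the one-second time step, and all of the rates and balances are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class BurstBucket:
    baseline_mibps: float   # credit earn rate (in EFS this scales with stored data)
    burst_mibps: float      # throughput ceiling while credits remain
    credits_mib: float      # current credit balance
    max_credits_mib: float  # cap on the balance

    def step(self, demand_mibps: float, seconds: float = 1.0) -> float:
        """Advance time by `seconds` and return the throughput delivered (MiB/s)."""
        limit = self.burst_mibps if self.credits_mib > 0 else self.baseline_mibps
        delivered = min(demand_mibps, limit)
        # Credits are earned at the baseline rate and spent at the delivered rate.
        self.credits_mib += (self.baseline_mibps - delivered) * seconds
        self.credits_mib = max(0.0, min(self.credits_mib, self.max_credits_mib))
        return delivered


# Hypothetical numbers: 50 MiB/s baseline, 100 MiB/s burst, 1000 MiB of credits.
demo = BurstBucket(baseline_mibps=50, burst_mibps=100,
                   credits_mib=1000, max_credits_mib=10000)

burst_phase = [demo.step(demand_mibps=100) for _ in range(20)]  # drains the credits
throttled = demo.step(demand_mibps=100)                         # falls back to baseline
print(burst_phase[0], throttled, demo.credits_mib)
```

In this model a sustained workload drains the balance and is then throttled to the baseline rate, while idle periods refill it. That is why Bursting mode suits spiky workloads better than continuously busy ones.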

EFS performance modes

EFS performance modes are used to specify what type of performance is most important to your use case. There are only two options, so you will need to decide whether you will prioritize very low latency or higher I/O.

Choosing an EFS performance mode

- General Purpose (default): This mode has the lowest latency and is generally the faster option unless you have very high I/O loads.

- Max I/O: This is designed for highly parallelized workloads that can tolerate higher latencies. Note that this mode can’t be used with the EFS OneZone storage classes or the Elastic throughput mode.
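If you create filesystems programmatically, the throughput and performance modes map onto request parameters. The sketch below builds the keyword arguments for boto3's EFS `create_file_system` call and enforces the Max I/O restrictions mentioned above. The helper name `efs_create_args` is hypothetical, and the parameter names and values reflect our understanding of the boto3 API, so check them against the current AWS documentation before relying on them.

```python
from typing import Any, Dict, Optional


def efs_create_args(
    token: str,
    throughput_mode: str = "elastic",            # "elastic", "bursting" or "provisioned"
    performance_mode: str = "generalPurpose",    # "generalPurpose" or "maxIO"
    provisioned_mibps: Optional[float] = None,
    one_zone_az: Optional[str] = None,           # set to an AZ name for OneZone storage
) -> Dict[str, Any]:
    """Build keyword arguments for an EFS create_file_system request."""
    if performance_mode == "maxIO" and (throughput_mode == "elastic" or one_zone_az):
        raise ValueError("Max I/O cannot be combined with Elastic throughput or OneZone")
    if (throughput_mode == "provisioned") != (provisioned_mibps is not None):
        raise ValueError("provisioned_mibps is required for, and only for, Provisioned mode")

    args: Dict[str, Any] = {
        "CreationToken": token,
        "PerformanceMode": performance_mode,
        "ThroughputMode": throughput_mode,
    }
    if provisioned_mibps is not None:
        args["ProvisionedThroughputInMibps"] = provisioned_mibps
    if one_zone_az:
        args["AvailabilityZoneName"] = one_zone_az
    return args


example = efs_create_args("demo-token", throughput_mode="provisioned", provisioned_mibps=500)
print(example)

# With boto3 installed and AWS credentials configured, the request could be sent with:
#   import boto3
#   boto3.client("efs").create_file_system(**example)
```

Keeping the validation separate from the API call means an incompatible combination is rejected locally, before any request is made.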

EFS alternatives — other AWS storage options

You may be tempted to use another of the AWS storage options to save costs over EFS. This can be advantageous for many use cases but, if you are not careful, can result in your setup becoming technical debt that needs to be corrected later on. You need to consider the differences in how EFS alternatives such as S3 work, as well as the performance implications of your choice.

Amazon S3

- S3 Standard (Default): This is the fastest regular S3 storage class. With S3 Standard storage, you can access your data quickly compared to most other S3 storage options, as it’s not cold storage. S3 Standard is a suitable option if you need to access your data relatively quickly at an affordable price across multiple Availability Zones.

- S3 Standard-Infrequent Access: Like the EFS Infrequent-Access options, this is useful when you have data that you don’t access frequently. However, when you do need to access your data you can still access it at the same speed as S3 Standard storage. This option works out cheaper than S3 Standard provided you access your data less than once every two months.

- S3 One Zone-Infrequent Access: This is 20% cheaper than S3 Standard-Infrequent Access but it means your data will only be available in one Availability Zone. This is a good option if you want a cheap way to store secondary backups.

- S3 Glacier Instant Retrieval: This is a type of cold storage, which means data retrieval is slower than the above options. However, it works out 68% cheaper than S3 Standard-Infrequent Access if you only access your data once per quarter. It’s worth noting that this is the fastest of the cold storage options.

- S3 Glacier Flexible Retrieval: This is up to 10% cheaper than S3 Glacier Instant Retrieval but is intended for data that is accessed only 1–2 times per year. It’s also slower to access data than Glacier Instant Retrieval.

- S3 Glacier Deep Archive: This is the lowest-cost S3 storage tier. Retrieval is even slower than with the Flexible Retrieval tier; however, its main use is for highly-regulated industries that need to retrieve data only occasionally and are not affected by performance speeds.

- S3 Express One Zone: This is a special low-latency S3 tier built for high IOPS workloads. Latencies are reduced by up to 10x, but storage costs 7-8x as much per month as S3 Standard. Read and write requests cost half as much, meaning that very high IOPS workloads may recover the steeper base costs. The data is also only accessible within a single Availability Zone. Most applications that can use S3 may need to be rewritten for S3 Express One Zone, and many existing S3-native applications do not work with it.

S3 Standard has higher latencies than EFS, but it can deliver much higher throughput. Another downside compared to EFS is that S3 is object storage, so you can’t interact with your files through the filesystem. Instead, you’ll have to adapt your applications to use the S3 API, which can require significant work.

While there are open-source packages that allow you to work with object storage as a filesystem (such as s3fs and goofys), these FUSE-based solutions are still not performant enough for most use cases.
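To illustrate what "flat" object storage means for filesystem-style access, the sketch below emulates a directory listing over a flat collection of object keys by filtering on a prefix and grouping on a delimiter. This mirrors the general prefix-and-delimiter idea behind S3 listings, but it is a self-contained toy that makes no S3 calls; the function name and sample keys are made up for the example.

```python
from typing import Iterable, List, Tuple


def list_dir(keys: Iterable[str], prefix: str, delimiter: str = "/") -> Tuple[List[str], List[str]]:
    """Emulate a directory listing over flat object keys.

    Returns (files directly under `prefix`, immediate "subdirectories").
    """
    files: List[str] = []
    subdirs = set()
    for key in keys:
        if not key.startswith(prefix):
            continue
        rest = key[len(prefix):]
        head, sep, _ = rest.partition(delimiter)
        if sep:
            # The key lives deeper down; only its first path component is a "directory".
            subdirs.add(prefix + head + delimiter)
        elif rest:
            files.append(key)
    return sorted(files), sorted(subdirs)


bucket_keys = ["data/a.txt", "data/raw/b.bin", "data/raw/c.bin", "logs/x.log"]

print(list_dir(bucket_keys, "data/"))
print(list_dir(bucket_keys, ""))
```

Directories here are only a naming convention layered on top of the keys, so a filesystem adapter has to translate every directory operation into queries like this one.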

FSx for Lustre

FSx for Lustre is a managed AWS service based on the Lustre filesystem, which was developed for highly parallel workloads. Because of this, FSx for Lustre provides very high throughput for parallel workloads, and can often also work out cheaper than EFS. Unlike EFS and S3, the storage you pay for includes free space for writing new files, so capacity must be pre-provisioned and paid for. The provisioned filesystem size can be increased but not decreased, and increasing it makes the filesystem unavailable for a few minutes.

Amazon EBS

Amazon EBS offers various options for price, performance and durability with its different volume types.

- gp3 (EBS General Purpose SSD volume): This type of volume works well for transactional databases or other projects that require a lot of fast reads and writes.

- gp2 (EBS General Purpose SSD volume): This is a precursor to gp3 — and as gp3 offers better performance at a lower price, there is no reason to choose this volume type any more. You should always choose gp3 over gp2.

- io1 (EBS Provisioned IOPS SSD volume): This has the same throughput as gp3, but has better IOPS. You should upgrade to this if you’re using gp3 and experiencing IOPS bottlenecks or if you have I/O-intensive database workloads.

- io2 (EBS Provisioned IOPS SSD volume): This has the same performance as io1 but is more durable — it has 99.999% durability compared to the 99.8% – 99.9% durability of io1.

- io2 Block Express (EBS Provisioned IOPS SSD volume): This is the fastest volume. You should use it if you need sub-millisecond latency as it’s four times as fast as io2 with equal durability.

- st1 (EBS Throughput Optimized HDD volume): This has similar throughput speeds to the General Purpose SSD volumes but its IOPS is much lower. Use this if throughput and cost are important but fast IOPS speeds are not critical — for example, for analytic workloads like data warehouses or log processing. It’s less than half the cost of the General Purpose SSD volumes so is excellent value for money.

- sc1 (EBS Cold HDD volumes): This is the cheapest EBS volume type. It has around half the IOPS and throughput of st1. Use this to keep costs down for your less frequently accessed data.

EBS volumes must be attached to EC2 instances to be accessed, and there are some restrictions on this. Generally speaking, a volume can only be attached to a single EC2 instance. However, you can use EBS Multi-Attach to attach an io1 or io2 volume to up to 16 instances in the same Availability Zone, provided either that you have special storage software that can handle write synchronisation between instances, or that the volume is attached as read-only. This makes EBS a poor replacement for EFS if you have larger projects that require reading and writing from multiple instances or from different locations.

A common problem EBS users encounter is the strict limits to how much you can parallelize your workloads to gain performance improvements (even using EBS Multi-Attach, the limit is 16). With EFS there is no limit on the number of instances that can be run in parallel, meaning that EBS is not a match for EFS’s throughput for larger workloads.

cunoFS — a high-performance scalable EFS alternative, backed by Amazon S3

cunoFS is a storage solution that presents a high-performance POSIX-compliant filesystem that runs on top of AWS S3, allowing you to access S3 object storage as if it were a native filesystem, with much higher performance than using the S3 API/CLI or FUSE-based solutions like s3fs, Mountpoint, and goofys.

cunoFS was originally developed for the pharmaceutical industry (specifically for use in genomics), where large volumes of data need to be stored in a cost-effective manner, while remaining accessible for high-performance use cases such as bioinformatics analysis and machine learning. cunoFS has been so effective that PetaGene is making it publicly available so that other industries can benefit.

cunoFS has the highest throughput of all the listed EFS options and alternatives, including FSx for Lustre.

cunoFS also includes cunoFS Fusion — a deployment configuration for cunoFS that combines high-IOPS EFS storage with high-throughput, affordable S3 storage. This is ideal for situations where you need high IOPS as well as high throughput.

cunoFS Fusion works by automatically moving data between EFS and S3 depending on access frequency and application behavior — providing the best of both worlds when it comes to price and performance. In the production deployments we’ve observed, we’ve seen EFS usage reduced by 80%, with more affordable S3 storage making up the difference, providing significant savings.

Which AWS storage solution should I choose?

Now you’re aware of the key facts and benefits of each different EFS option (and other AWS alternatives) you’re in a good position to determine which is best for your use case.
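One way to make that comparison concrete is to filter the benchmark results by a time budget and then pick the cheapest remaining option. The sketch below does this for a handful of rows from the one-copy-per-day table, using the large-file write column. The `Option` type and `cheapest_within` function are illustrative names, only a subset of rows is included, and the cunoFS figure excludes any commercial license fee, as noted in the table.

```python
from typing import List, NamedTuple, Optional


class Option(NamedTuple):
    name: str
    write_seconds: int  # time to copy 5 x 32 GiB into the storage system
    monthly_cost: int   # USD per month at one copy operation per day


# A subset of rows from the one-copy-per-day table.
OPTIONS = [
    Option("EFS Standard Elastic", 330, 631),
    Option("EFS Standard Bursting", 1529, 322),
    Option("EBS gp3", 655, 91),
    Option("EBS io2", 219, 329),
    Option("FSx for Lustre SSD 125 MBps", 330, 178),
    Option("cunoFS with S3", 37, 26),
]


def cheapest_within(options: List[Option], max_seconds: int) -> Optional[Option]:
    """Return the cheapest option that finishes within the budget, or None."""
    candidates = [o for o in options if o.write_seconds <= max_seconds]
    return min(candidates, key=lambda o: o.monthly_cost) if candidates else None


best = cheapest_within(OPTIONS, max_seconds=400)
print(best.name if best else "No option meets the time budget")
```

The same filter can be applied to any of the other columns, or to the ten-copies-per-day table, depending on which operation dominates your workload.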

EFS is generally a fast filesystem; however, it does not perform as well when you need to write lots of small files. Among the EFS options, the Provisioned throughput mode is the highest-performing one, but it’s also the most expensive, so you have to decide whether the extra cost is worth it.

If you’re reading or writing large files, or reading many smaller files, cunoFS is the clear winner on both performance and cost.

When it comes to writing many smaller files, the fastest option is EBS io2. However, it’s worth noting that EBS is not really designed to be a distributed file system — the usual way to use it is to attach it to a single EC2 instance at a time. cunoFS with S3 is the next fastest option for writing lots of small files, and it doesn’t have limitations on the number of instances accessing the data or the instance types it can be used on.

cunoFS is the best option when you need high performance combined with lower cost — it has much higher throughput than either using S3 alone or other FUSE-based options. If you need high IOPS for very large files, cunoFS Fusion will provide the best of both worlds by giving you the high IOPS of EFS or FSx Lustre, and the ultra-high throughput and lower costs of S3.

Overall, for most use cases, cunoFS will be faster and more affordable than the alternatives. You can download cunoFS and try it out right away.